What is the Agentforce Testing Center?

The Agentforce Testing Center is a Salesforce tool that lets admins and developers validate the reliability of AI agents built with Agentforce before deploying them to production. It provides a framework for creating, managing, and running automated tests that simulate real-world user interactions and conversation scenarios.

Testing Center supports batch testing, evaluation of expected topics and actions, and comparison of expected versus actual responses to validate agent logic, intent handling, and action execution.

Why Testing Custom Agents Is Important

AI agents are designed to interpret utterances (user inputs) and select appropriate topics and actions; however, AI responses can vary based on context, phrasing, and conversation history. This makes testing crucial because:

- It validates that an agent behaves as expected across diverse scenarios.

- It ensures correct action execution and topic selection.

- It prevents unexpected responses or mis-routed actions in production.

- It builds confidence that the agent meets business requirements and handles edge cases reliably.

Testing early and often helps refine guardrails, improve conversational quality, and deploy trustworthy agents.

Overview of the Testing Center Workflow

The Testing Center workflow generally follows these steps:

- Access the Testing Center – Navigate to the Testing Center from Setup or Agentforce Builder.

- Set Up Test Criteria – Create a new test and configure basic settings.

- Create or Upload Test Cases – Define test cases manually via CSV or generate them with AI.

- Select Test Criteria – Review and finalize the test scenarios.

- Run Your Test Suite – Execute the tests in batch.

- Review and Analyze Results – Evaluate outcomes and iterate.

Step-by-Step: Using the Testing Center

1. Access the Testing Center

To open the Testing Center:

- From Setup, search for and select Testing Center.

- Alternatively, from Agentforce Builder, click the Batch Test button above the Conversation Preview panel, which takes you directly to the Testing Center UI.

The Testing Center acts as a central hub where you can define, upload, and run tests for any agent available in your org.

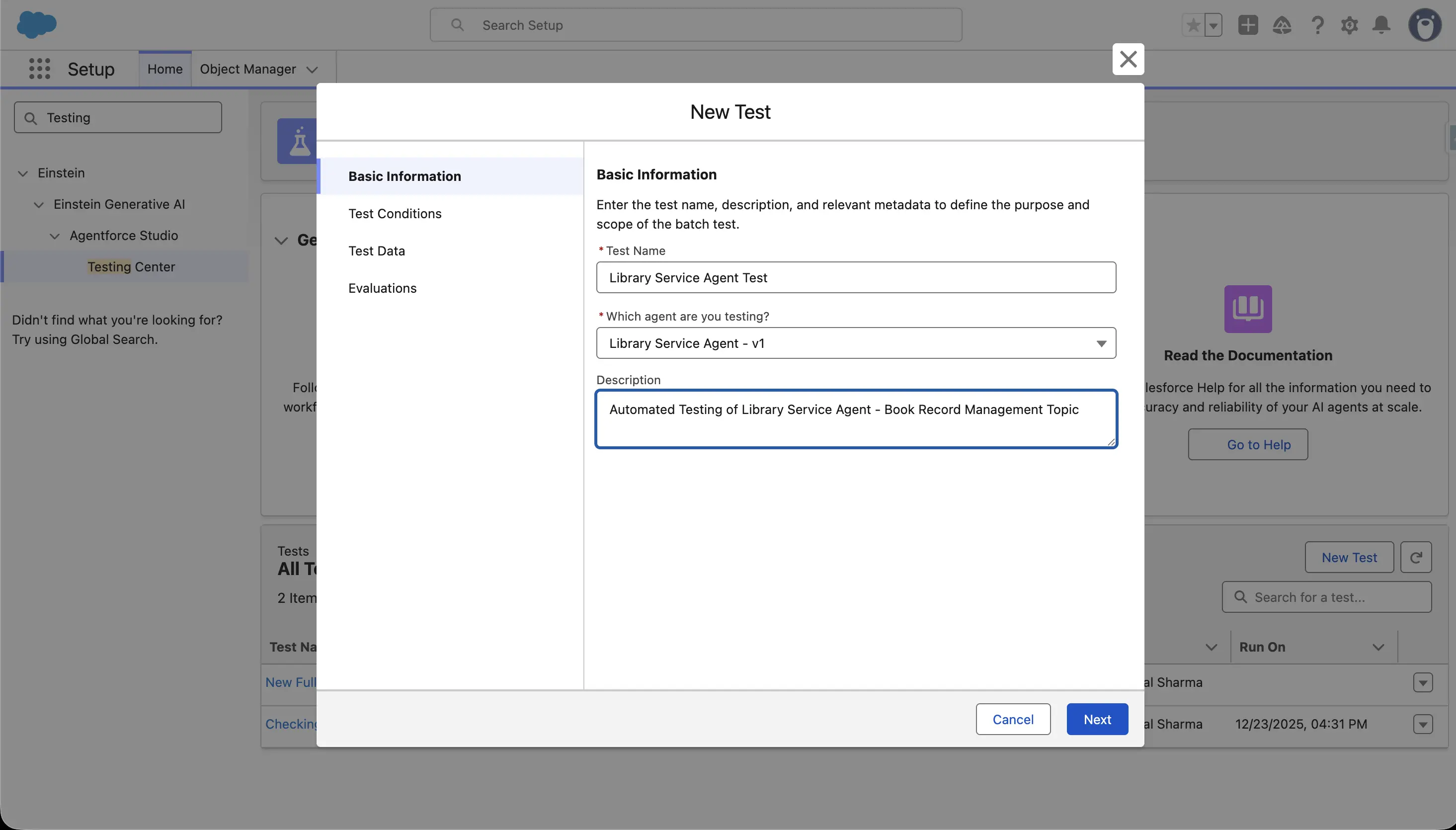

2. Set Up Test Criteria

Once in the Testing Center:

- Click New Test.

- Enter a Test Name and select the agent you want to test.

- Optionally, provide a description that explains what this test verifies, for example: “Test customer case lookup agent responses”.

- Proceed to the next step to upload or generate test scenarios.

3. Create or Upload Test Cases

There are two main ways to define test cases:

A. Manual CSV Creation

- Use the Testing Center CSV template, which includes columns such as:

  - Utterance – the input text (question or request)

  - Expected Topic – the topic the agent should trigger

  - Expected Actions – the list of actions the agent should run

  - Expected Response – a description of the desired agent output

- Save your test cases as a CSV file and upload it in the Testing Center.
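As a rough illustration of the manual route, the sketch below builds such a file with Python's standard `csv` module. The column headers are taken from the list above. The topic names, action names, and the comma-separated format for multiple actions are assumptions made for illustration, so check them against the template downloaded from your own org.

```python
import csv
import io

# Headers as listed in this article; confirm against your org's template.
COLUMNS = ["Utterance", "Expected Topic", "Expected Actions", "Expected Response"]

# Hypothetical test cases; topic and action names are placeholders.
TEST_CASES = [
    {
        "Utterance": "What is the status of case 00001234?",
        "Expected Topic": "Case_Lookup",
        "Expected Actions": ["Get_Case_By_Number"],
        "Expected Response": "States the current status of the case.",
    },
    {
        "Utterance": "Where is my order and can I track it?",
        "Expected Topic": "Order_Status",
        "Expected Actions": ["Get_Order_Status", "Send_Tracking_Link"],
        "Expected Response": "Gives the order status and a tracking link.",
    },
]


def build_csv(cases):
    """Return the test cases as CSV text with one header row."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=COLUMNS, lineterminator="\n")
    writer.writeheader()
    for case in cases:
        row = dict(case)
        # Assumption: several actions share one cell, separated by commas.
        row["Expected Actions"] = ", ".join(case["Expected Actions"])
        writer.writerow(row)
    return buffer.getvalue()


def write_csv(path, cases):
    """Write the CSV text to a file ready for upload."""
    with open(path, "w", newline="", encoding="utf-8") as handle:
        handle.write(build_csv(cases))
```

Calling `write_csv("agent_tests.csv", TEST_CASES)` would produce a file to upload; the file name is arbitrary.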

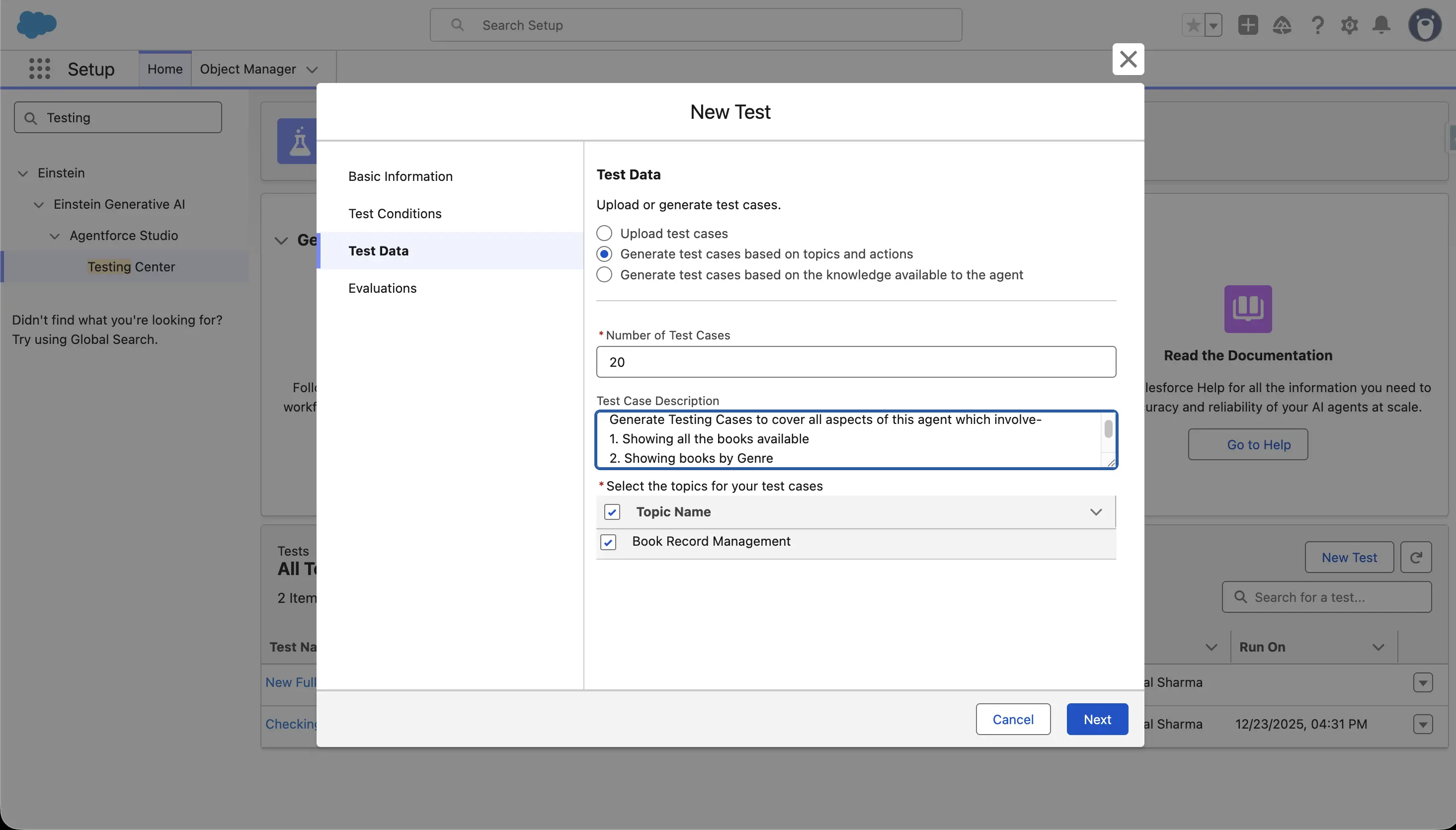

B. AI-Generated Test Cases

- Instead of writing each test manually, use the Testing Center’s AI test generation feature to automatically generate a set of diverse utterances based on agent topics and expected behaviors.

This helps simulate large volumes of realistic user interactions quickly.

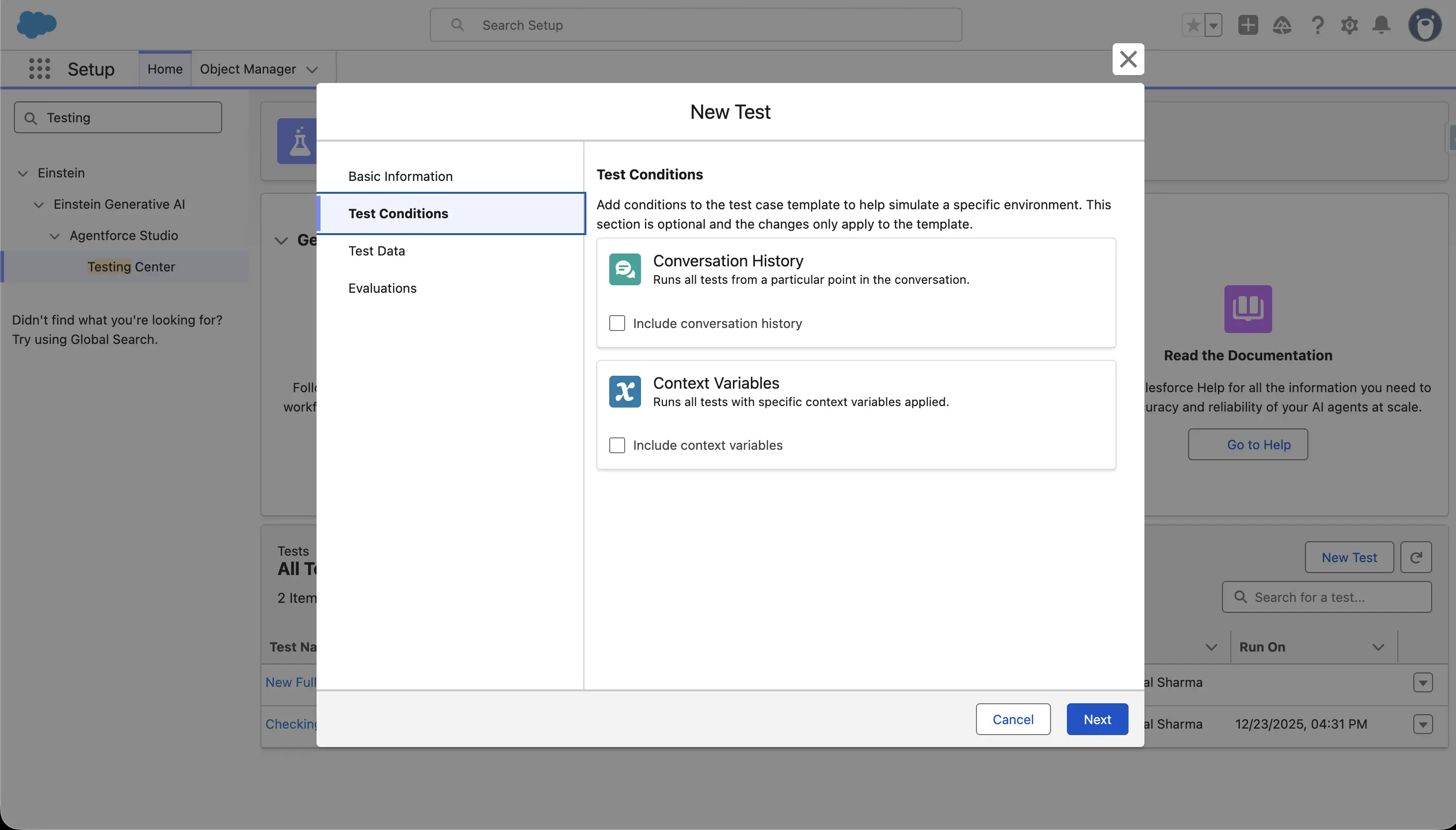

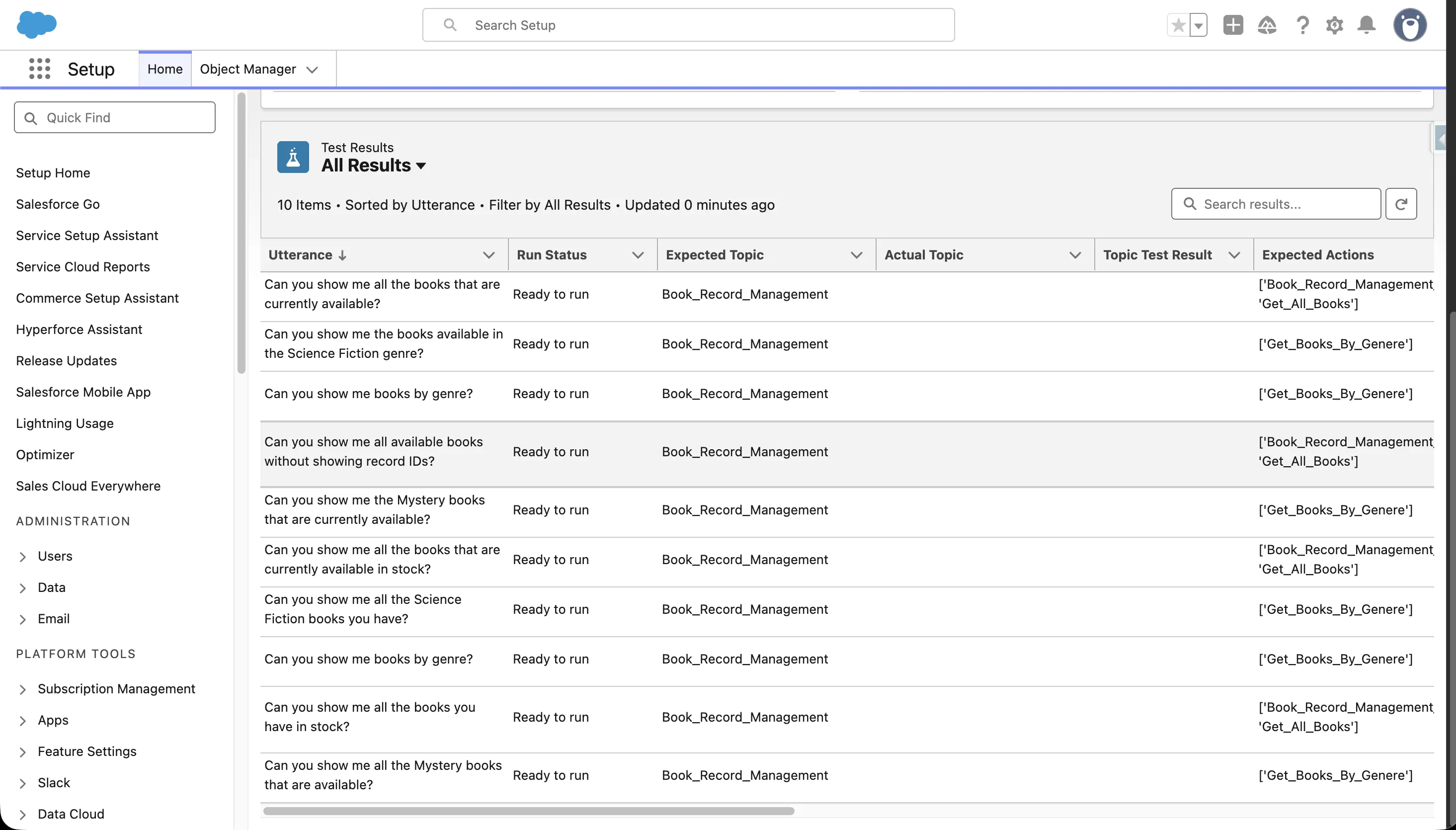

4. Select Test Criteria

After uploading or generating test cases, review and select the appropriate test criteria. Verify that each scenario matches your agent's intended behavior by checking its utterance, expected topic, expected actions, and expected response.
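Part of this review can be done before the file ever reaches the Testing Center. The following is a minimal sketch of a local pre-check, assuming the column headers named earlier in this article. The three rules it applies (no empty utterances, no duplicate utterances, no missing expected topic) are illustrative choices, not checks that the Testing Center is known to perform.

```python
import csv
import io

REQUIRED = ["Utterance", "Expected Topic", "Expected Actions", "Expected Response"]


def find_problems(csv_text):
    """Return a list of human-readable problems found in the CSV text."""
    reader = csv.DictReader(io.StringIO(csv_text))
    missing = [col for col in REQUIRED if col not in (reader.fieldnames or [])]
    if missing:
        return [f"missing columns: {', '.join(missing)}"]

    problems = []
    seen = set()
    # Test cases are numbered from 1, not counting the header row.
    for number, row in enumerate(reader, start=1):
        utterance = (row["Utterance"] or "").strip()
        if not utterance:
            problems.append(f"test case {number}: empty utterance")
            continue
        key = utterance.lower()
        if key in seen:
            problems.append(f"test case {number}: duplicate utterance")
        seen.add(key)
        if not (row["Expected Topic"] or "").strip():
            problems.append(f"test case {number}: no expected topic")
    return problems
```

An empty list means none of these particular rules were violated; it says nothing about whether the expectations themselves are correct.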

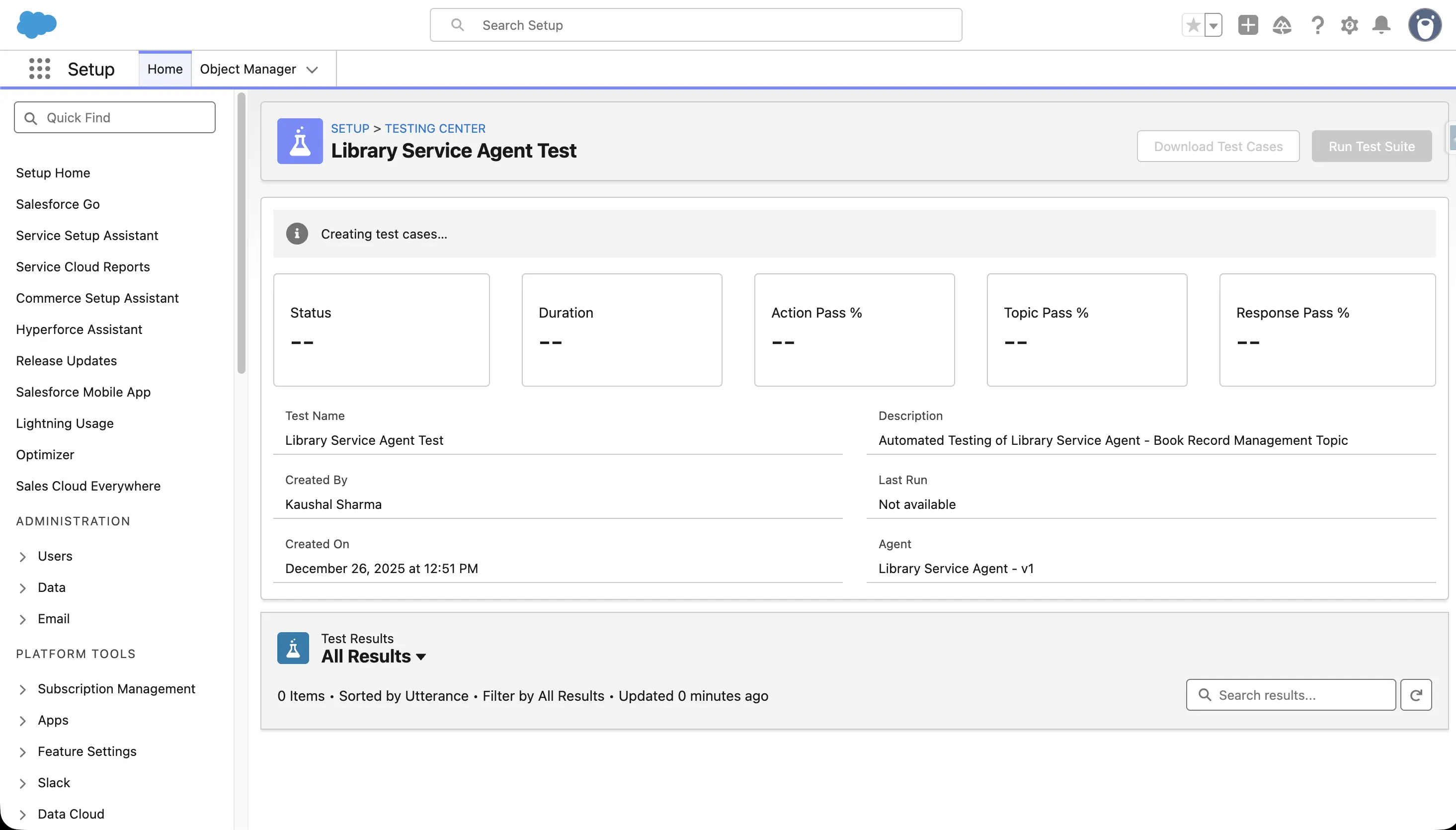

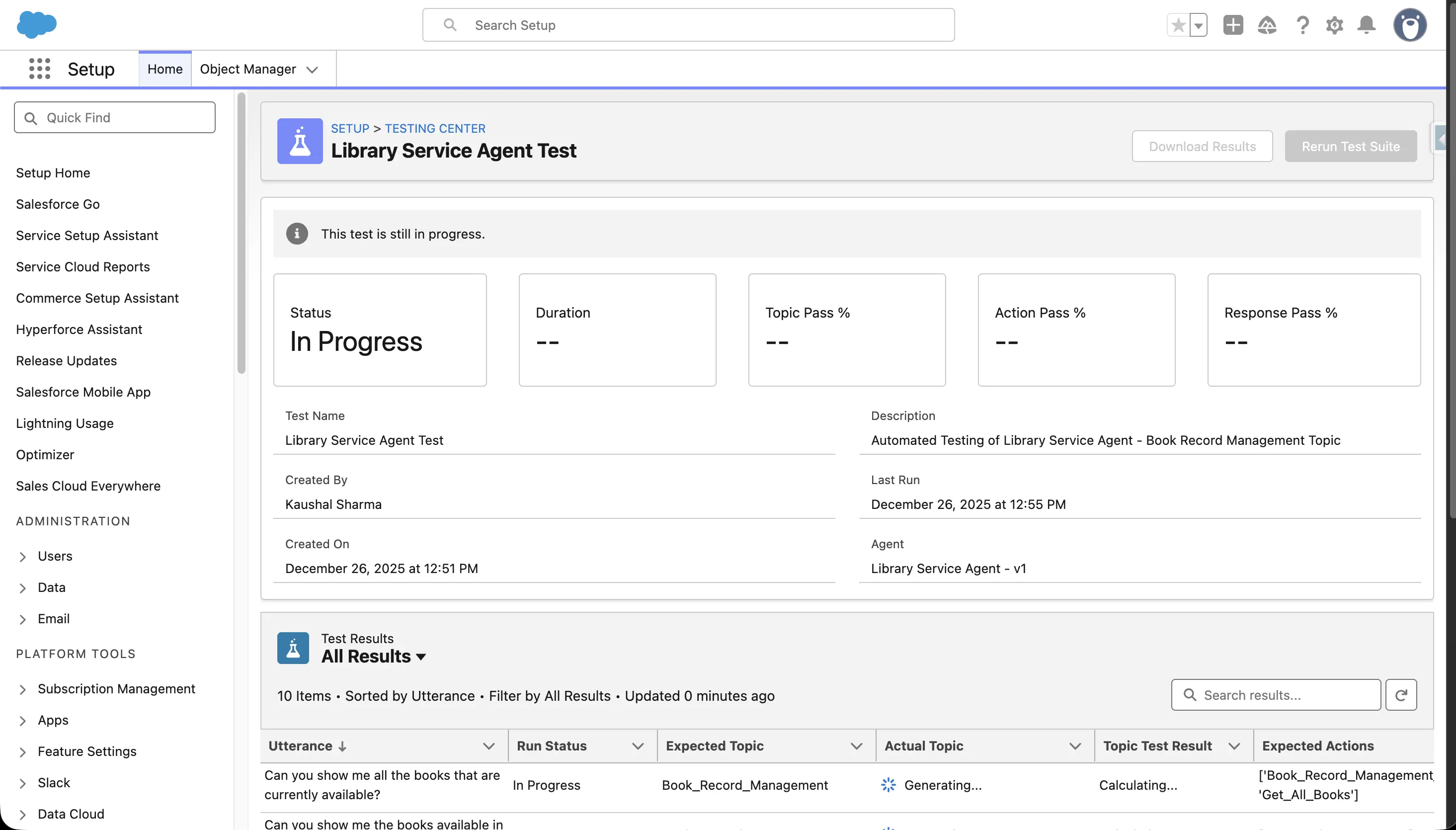

5. Run Your Test Suite

After uploading or generating test cases:

- Click Run Test Suite.

- The Testing Center executes all test cases in parallel, simulating user inputs (utterances) against the agent’s logic.

- It then compares expected results (topic, actions, responses) with actual results.
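To make the comparison step concrete, here is a conceptual sketch of checking an expected outcome against an actual one. It is not the Testing Center's implementation: the `Outcome` type, the order-insensitive action check, and the sample names are all assumptions. The response check is left out because the expected response is a description of the desired output rather than exact text, so a simple equality test would not be meaningful.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Outcome:
    """Hypothetical record of the topic and actions for one utterance."""
    topic: str
    actions: tuple = ()


def compare(expected, actual):
    """Return a per-criterion verdict for one test case."""
    return {
        "topic": expected.topic == actual.topic,
        # Assumption: action order is not being tested, only the set used.
        "actions": sorted(expected.actions) == sorted(actual.actions),
    }


def passed(checks):
    """A test case passes only if every criterion passes."""
    return all(checks.values())


expected = Outcome("Case_Lookup", ("Get_Case_By_Number",))
actual = Outcome("General_FAQ", ("Answer_With_Knowledge",))
checks = compare(expected, actual)
print(checks)          # {'topic': False, 'actions': False}
print(passed(checks))  # False
```

Keeping the verdict per criterion, instead of a single pass/fail flag, mirrors how results are reviewed: a wrong topic and a wrong action usually call for different fixes.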

6. Review and Analyze Results

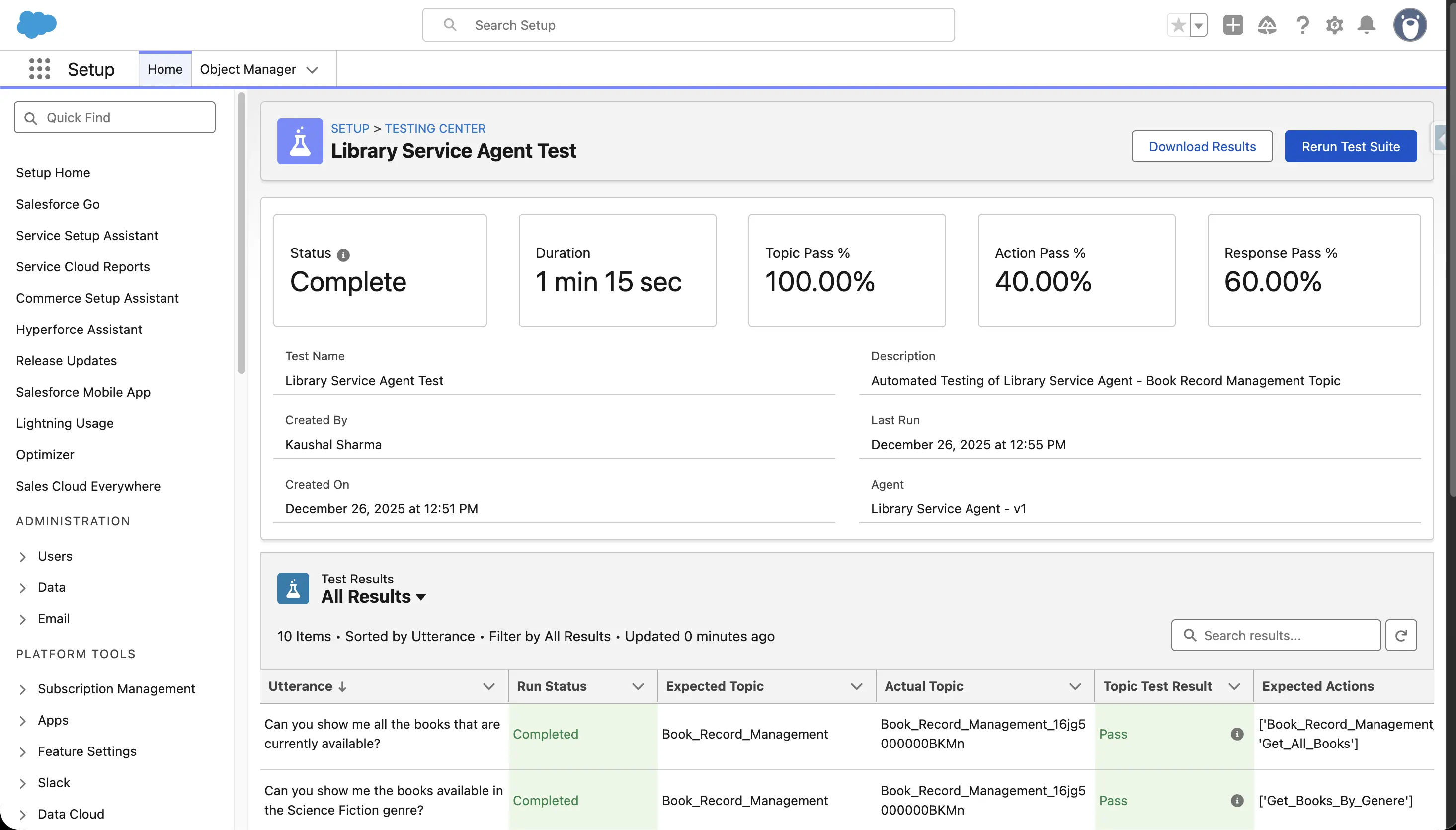

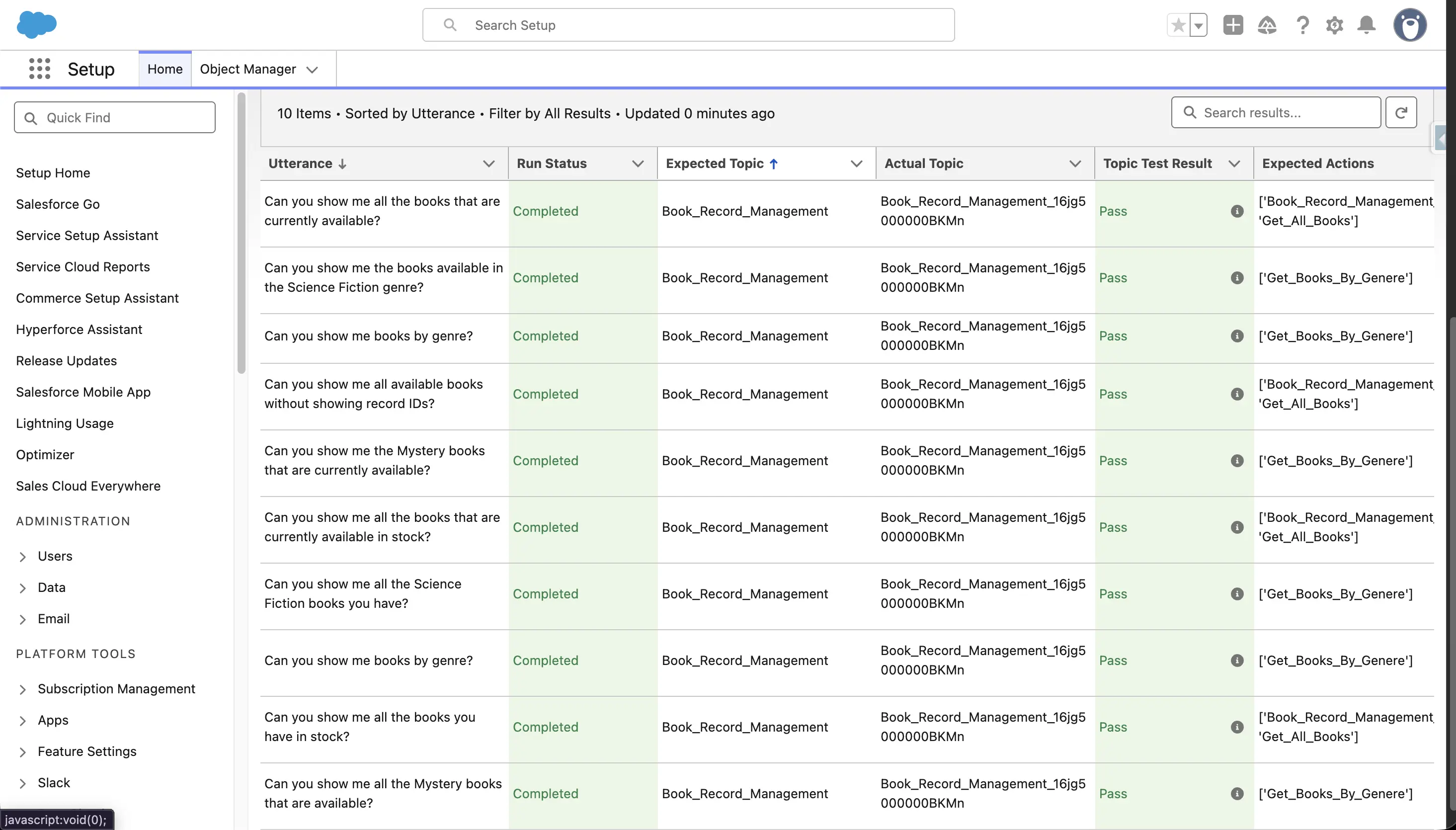

Once the tests have run:

- You will see which test cases passed and which failed.

- The Testing Center shows both the expected and actual topics, actions, and responses.

- For failed cases, you can refine your agent’s instructions, topics, or guardrails in Agentforce Builder and re-run tests.

This is effectively a test-driven development (TDD) approach for AI agents: write tests first, then update the agent until all tests reliably pass.
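When a suite is large, it can help to group failures before deciding what to change. The sketch below assumes you have copied the results into a list of dictionaries with hypothetical `expected_topic` and `passed` keys; it does not rely on any particular export format from the Testing Center.

```python
from collections import Counter

# Hypothetical results, transcribed by hand from a test run.
RESULTS = [
    {"utterance": "Status of case 00001234?", "expected_topic": "Case_Lookup", "passed": True},
    {"utterance": "Look up my ticket", "expected_topic": "Case_Lookup", "passed": False},
    {"utterance": "Find case for Acme", "expected_topic": "Case_Lookup", "passed": False},
    {"utterance": "Where is my order?", "expected_topic": "Order_Status", "passed": False},
]


def pass_rate(results):
    """Fraction of test cases that passed (0.0 for an empty run)."""
    if not results:
        return 0.0
    return sum(1 for r in results if r["passed"]) / len(results)


def failures_by_topic(results):
    """Failed test cases per expected topic, most failures first."""
    counts = Counter(r["expected_topic"] for r in results if not r["passed"])
    return counts.most_common()


print(pass_rate(RESULTS))          # 0.25
print(failures_by_topic(RESULTS))  # [('Case_Lookup', 2), ('Order_Status', 1)]
```

A topic that accounts for most of the failures is a reasonable first candidate for revised instructions before the suite is re-run.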

Best Practices for Agent Testing

To ensure robust and trustworthy AI agents:

- Start with a diverse test dataset covering normal and edge cases.

- Use both manual and AI-generated tests for comprehensive coverage.

- Test frequently as you update agent logic or prompt templates.

- Run tests in sandbox environments to avoid unintended CRM data changes.

- Iterate on results by adjusting topics, actions, or guardrails based on failures.

Conclusion

The Salesforce Agentforce Testing Center is essential for validating custom AI agents before deployment. It reduces manual effort through batch testing and AI-generated scenarios, giving development teams the confidence to deploy agents that behave as designed. By defining rich test criteria, running test suites, and analyzing results, you can catch issues early, fine-tune agent logic, and deliver reliable, production-ready AI experiences.
